Over the past few years, In Sync Dance has continued to offer live video streaming as an option for family and friends who want to watch the ballet shows but can’t make it in person. As a result, I’ve continued to provide the streaming services and have grown my skills and equipment list along the way.

One of the biggest transitions was moving from a Blackmagic ATEM Mini Pro to an ATEM Mini Extreme ISO. The Extreme ISO records every video input individually in addition to the final program output. After the show, we can go back and produce a final recording to distribute to the dance families. Because we have the original footage to remix from, we can correct any mistakes made during filming, so the final long-term version is something we’re happy to have people watch again and again.

Let’s go over the control room setup based on the photo above:

On the right is a Behringer X32 mixer. The mixer is set up to receive a copy of all of the inputs from the school’s X32 over AES50. I then mix the audio for the entire show to suit the stream without having to worry about affecting what the audience in the auditorium is hearing. Also on the table with the mixer is the equipment rack that houses the various computers I use for the production.

There are four monitors along the top row of the desk. First on the left is the general “control” computer monitor. This is where I run any applications for additional or more in-depth control of my devices. The second screen is the multiview of the ATEM Extreme ISO, showing all camera and computer inputs. The third screen is the output of the ATEM Mini Pro, which I will describe in more detail shortly. The screen on the right, on top of the rack, is a Chromebook monitoring the final stream (the stream wasn’t active when the picture was taken).

On the desk level, on the left is a second screen for the control computer, commonly used for script notes. The white keyboard is for the control computer. Next is the third screen for the control computer. It is a touch screen, which is useful for things like adjusting the mix or running additional cues. In front of that screen is a Stream Deck, used for triggering macros through Bitfocus Companion. Next is the ATEM Mini Extreme ISO, which handles the primary show switching. The ATEM Mini Pro is the final item on the desk.

The program output of the ATEM Extreme is fed to the school’s SDI network, so performers backstage can see what is happening in the show and where in the script the show is. However, the ATEM Mini Pro sits between the Extreme and the SDI network. This gives me an additional means of controlling the SDI network and, in particular, lets me overlay text for communicating backstage. For example, I put up a countdown clock to show time that only people backstage can see.
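As a rough illustration of the countdown idea, here is a minimal Python sketch that turns the time remaining until a target moment into overlay-style text. It is not how my graphics software produces the clock. The function name `format_countdown` and the 15-minute example are made up purely for illustration.

```python
from datetime import datetime, timedelta


def format_countdown(target: datetime, now: datetime) -> str:
    """Return the time remaining until `target` as MM:SS, or H:MM:SS past an hour."""
    # Clamp at zero so the clock holds at 00:00 once the target has passed.
    remaining = max(target - now, timedelta(0))
    total_seconds = int(remaining.total_seconds())
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{seconds:02d}"
    return f"{minutes:02d}:{seconds:02d}"


if __name__ == "__main__":
    # Hypothetical example: the curtain goes up 15 minutes from now.
    now = datetime.now()
    curtain = now + timedelta(minutes=15)
    print("Places in", format_countdown(curtain, now))
```

Passing `now` in explicitly, instead of reading the clock inside the function, makes the formatting easy to check against known times.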

The video stream to the Internet originates in the ATEM Extreme and is encoded in-device. OBS isn’t used for this event’s stream. The stream is carried over EventLive, which was suggested to us by the ticketing provider. EventLive is simple to work with and has offered us high reliability and video quality for an affordable price per show.

There are three computers in the rack. The first is the control computer, running Companion and other device control software. The second is connected to the Extreme and provides video playback using VLC, titling using H2R Graphics Pro on a second HDMI output, and backstage text, also using H2R, on a third HDMI output connected to the ATEM Mini Pro. The third computer is used exclusively for recording: OBS for video if needed, and Reaper for multitrack audio.

For this show, we used five cameras. One was a static shot from the sound booth at the back of the auditorium. Two cameras were on tripods at the back corners of the auditorium, staffed by high school students. The final two were the school’s Canon PTZ cameras, one located in the sound booth and the other downstage center. If you look closely at the top photo above, you can see my son in the background looking at the computer screen that controls the two PTZ cameras.

One very handy piece of equipment I picked up right before the start of this show is a Lumantek SDI to HDMI Converter with Display. I attached a 9V battery to the side of it, which gave me a very compact way to test SDI cable runs without needing to carry larger converters, screens, and batteries to power them all. The projector I set up in the school cafeteria was at the end of a 200′ SDI cable run, which was a bit marginal for signal quality. I was able to use the Lumantek to validate all connections before setting up the projector.

That being said, for the upcoming show I purchased an SDI-to-fiber adapter set, so the long run to the projector will go over armored fiber instead of coax cable, which will be a lot more reliable.

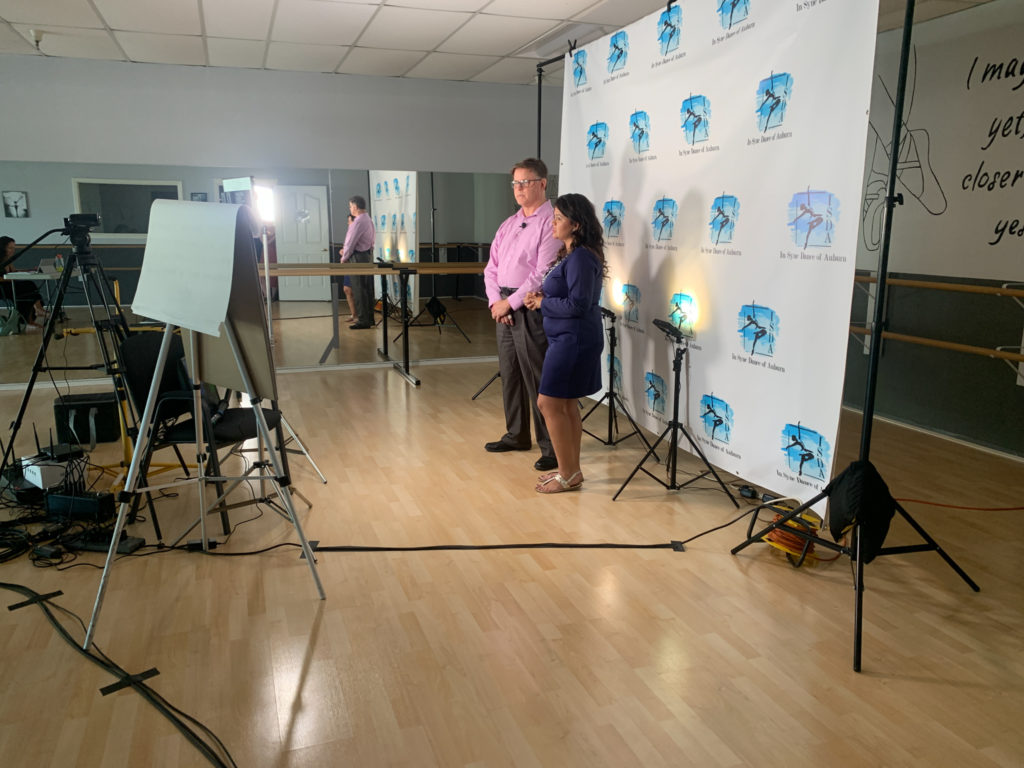

A final picture showing what the room looked like during setup on the rehearsal day:

Additional equipment in the background belongs to the high school. I do use the school’s cameras and wiring, but the rest of the gear is not used by me for the shows. I simply overlay my setup on theirs and utilize the shared space.